|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

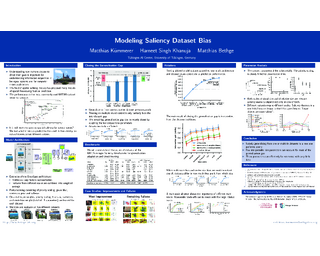

Recent advances in image-based saliency prediction are approaching gold standard performance levels on existing benchmarks. Despite this success, we show that predicting fixations across multiple saliency datasets remains challenging due to dataset bias. We find a significant performance drop (around 40%) when models trained on one dataset are applied to another. Surprisingly, increasing dataset diversity does not resolve this *inter-dataset gap*, with close to 60% attributed to dataset-specific biases. To address this remaining *generalization gap*, we propose a novel architecture extending a mostly dataset-agnostic encoder-decoder structure with fewer than 20 dataset-specific parameters that govern interpretable mechanisms such as multi-scale structure, center bias, and fixation spread. Adapting only these parameters to new data accounts for more than 75% of the generalization gap, with a large fraction of the improvement achieved with as few as 50 samples. Our model sets a new state-of-the-art on all three datasets of the MIT/Tuebingen Saliency Benchmark (MIT300, CAT2000, and COCO-Freeview), even when purely generalizing from unrelated datasets, but with a substantial boost when adapting to the respective training datasets. The model also provides valuable insights into spatial saliency properties, revealing complex multi-scale effects that combine both absolute and relative sizes.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

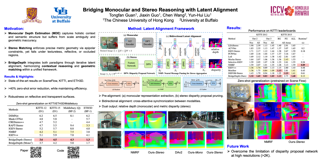

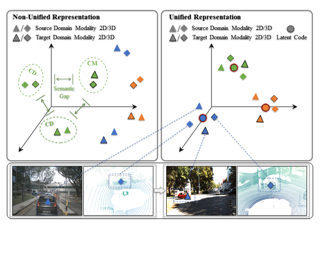

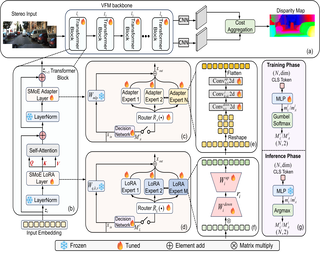

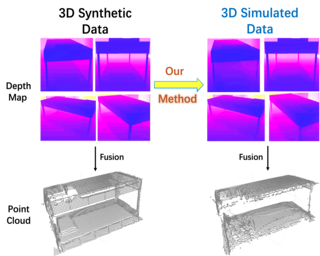

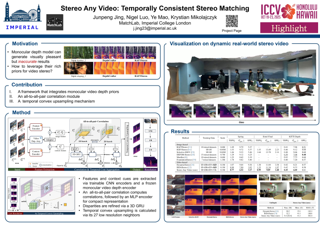

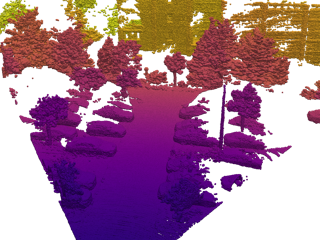

Monocular and stereo depth estimation offer complementary strengths: monocular methods capture rich contextual priors but lack geometric precision, while stereo approaches leverage epipolar geometry but struggle with ambiguities such as reflective or textureless surfaces. Despite their synergies, these paradigms remain largely disjoint in practice. We introduce OmniDepth, a unified framework that bridges both through iterative bidirectional alignment of their latent representations. At its core, a novel cross-attentive alignment mechanism dynamically synchronizes monocular contextual cues with disparity hypothesis representations during stereo reasoning. This mutual alignment resolves stereo ambiguities (e.g., specular surfaces) by injecting monocular structure priors while refining monocular depth with stereo geometry. Extensive experiments demonstrate state-of-the-art results: OmniDepth reduces zero-shot generalization error by more than 40% on Middlebury and ETH3D compared to leading stereo methods, while addressing longstanding failure cases on transparent and reflective surfaces. By harmonizing multi-view geometry with monocular context, OmniDepth advances robust 3D perception that transcends modality-specific limitations. Code and models will be released.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

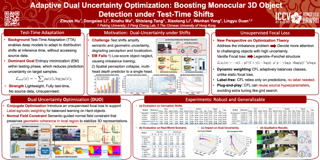

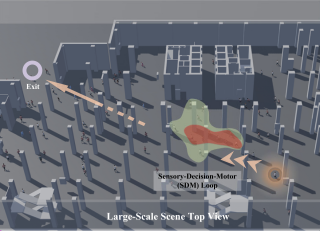

Accurate monocular 3D object detection (M3OD) is pivotal for safety-critical applications like autonomous driving, yet its reliability deteriorates significantly under real-world domain shifts caused by environmental or sensor variations. To address these shifts, Test-Time Adaptation (TTA) methods have emerged, enabling models to adapt to target distributions during inference. While prior TTA approaches recognize the positive correlation between low uncertainty and high generalization ability, they fail to address the dual uncertainty inherent to M3OD: semantic uncertainty (ambiguous class predictions) and geometric uncertainty (unstable spatial localization). To bridge this gap, we propose Dual Uncertainty Optimization (**DUO**), the first TTA framework designed to jointly minimize both uncertainties for robust M3OD. Through a convex optimization lens, we introduce an innovative convex structure of the focal loss and further derive a novel conjugate loss, enabling label-agnostic uncertainty weighting and balanced learning for high-uncertainty objects. In parallel, we design a semantic-aware normal field constraint that preserves geometric coherence in regions with clear semantic cues, reducing uncertainty from the unstable 3D representation. This dual-branch mechanism forms a complementary loop: enhanced spatial perception improves semantic classification, and robust semantic predictions further refine spatial understanding. Extensive experiments demonstrate the superiority of DUO over existing methods across various datasets and …

|

|

Highlight

|

Poster

Yuchen Zhou · Jiayu Tang · Xiaoyan Xiao · Yueyao Lin · Linkai Liu · Zipeng Guo · Hao Fei · Xiaobo Xia · Chao Gou

Exhibit Hall I

Abstract

Modeling task-driven attention in driving is a fundamental challenge for both autonomous vehicles and cognitive science. Existing methods primarily predict where drivers look by generating spatial heatmaps, but fail to capture the cognitive motivations behind attention allocation in specific contexts, which limits deeper understanding of attention mechanisms. To bridge this gap, we introduce Explainable Driver Attention Prediction, a novel task paradigm that jointly predicts spatial attention regions (where), parses attended semantics (what), and provides cognitive reasoning for attention allocation (why). To support this, we present W³DA, the first large-scale explainable driver attention dataset. It enriches existing benchmarks with detailed semantic and causal annotations across diverse driving scenarios, including normal conditions, safety-critical situations, and traffic accidents. We further propose LLada, a Large Language model-driven framework for driver attention prediction, which unifies pixel modeling, semantic parsing, and cognitive reasoning within an end-to-end architecture. Extensive experiments demonstrate the effectiveness of LLada, exhibiting robust …

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

The viewing graph is a compact tool to encode the geometry of multiple views: nodes represent uncalibrated cameras and edges represent fundamental matrices (when available). Most research focuses on theoretical analyses, exploring for which viewing graphs it is possible (in principle) to retrieve cameras from fundamental matrices, in the sense that the problem admits a unique solution for noiseless data. However, the practical task of recovering cameras from noisy fundamental matrices is still open, as available methods are limited to special graphs (such as those covered by triplets). In this paper, we develop the first method that can deal with the recovery of cameras from noisy fundamental matrices in a general viewing graph. Experimental results demonstrate the promise of the proposed approach on a variety of synthetic and real scenarios.
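To make the edge data of a viewing graph concrete, the sketch below builds the fundamental matrix for one edge from a pair of projective cameras and checks the epipolar constraint. It relies on the textbook identity that, for cameras `P1 = [I | 0]` and `P2 = [M | m]`, the fundamental matrix is `F = [m]_x M`. The function names and the numeric values are illustrative assumptions. This is the forward direction only (cameras to fundamental matrix), not the recovery method proposed in the abstract.

```python
def cross_matrix(v):
    """Skew-symmetric matrix [v]_x such that [v]_x w equals the cross product v x w."""
    x, y, z = v
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]


def matmul(a, b):
    """Product of two small dense matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def matvec(a, v):
    """Matrix-vector product."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in a]


def fundamental_from_cameras(M, m):
    """Fundamental matrix for the camera pair P1 = [I | 0], P2 = [M | m]."""
    return matmul(cross_matrix(m), M)


def epipolar_residual(F, x1, x2):
    """Value of x2^T F x1, which is zero for a true correspondence."""
    return sum(a * b for a, b in zip(x2, matvec(F, x1)))


# Hypothetical second camera (values chosen only for illustration).
M = [[0.9, -0.1, 0.2], [0.1, 1.0, -0.3], [0.0, 0.2, 1.1]]
m = [0.5, -0.2, 0.1]
F = fundamental_from_cameras(M, m)

# A 3D point (X, Y, Z); with P1 = [I | 0] its homogeneous image in camera 1 is (X, Y, Z).
point = [1.0, 2.0, 5.0]
x1 = point
x2 = [a + b for a, b in zip(matvec(M, point), m)]  # image in camera 2: M X + m
residual = epipolar_residual(F, x1, x2)
```

With noiseless data the residual vanishes up to floating-point error. The practical difficulty the abstract describes is the inverse problem, where only noisy `F` matrices on the graph edges are given.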

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

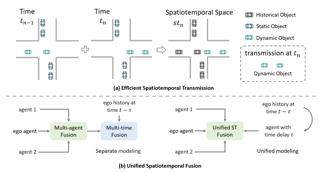

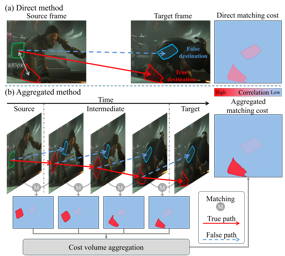

Collaborative perception shares information among different agents and helps solve problems that individual agents may face, e.g., occlusions and small sensing range. Prior methods usually separate multi-agent fusion and multi-time fusion into two consecutive steps. In contrast, this paper proposes an efficient collaborative perception approach that aggregates the observations from different agents (space) and different times into a unified spatio-temporal space simultaneously. The unified spatio-temporal space brings two benefits, i.e., efficient feature transmission and superior feature fusion. 1) Efficient feature transmission: each static object yields a single observation in the spatio-temporal space, and thus requires transmission only once (whereas prior methods re-transmit all the object features multiple times). 2) Superior feature fusion: merging the multi-agent and multi-time fusion into a unified spatio-temporal aggregation enables a more holistic perspective, thereby enhancing perception performance in challenging scenarios. Consequently, our Collaborative perception with Spatio-temporal Transformer (CoST) gains improvement in both efficiency and accuracy. Notably, CoST is not tied to any specific method and is compatible with a majority of previous methods, enhancing their accuracy while reducing the transmission bandwidth.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Despite the promise of Multi-Task Learning (MTL) in leveraging complementary knowledge across tasks, existing multi-task optimization (MTO) techniques remain fixated on resolving conflicts through optimizer-centric loss scaling and gradient manipulation, yet fail to deliver consistent gains. In this paper, we argue that the shared representation space, where task interactions naturally occur, offers rich information and potential for operations complementary to existing optimizer designs, especially for facilitating inter-task complementarity, which is rarely explored in MTO. This intuition leads to Rep-MTL, which exploits representation-level task saliency to quantify interactions between task-specific optimization and shared representation learning. By steering these saliencies through entropy-based penalization and sample-wise cross-task alignment, Rep-MTL aims to mitigate negative transfer by maintaining the effective training of individual tasks instead of pure conflict-solving, while explicitly promoting complementary information sharing. Experiments are conducted on four challenging MTL benchmarks covering both task-shift and domain-shift scenarios. The results show that Rep-MTL, even paired with the basic equal weighting (EW) policy, achieves competitive performance gains with favorable efficiency. Beyond standard performance metrics, Power Law (PL) exponent analysis demonstrates Rep-MTL’s efficacy in balancing task-specific learning and cross-task sharing.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

The widespread availability of off-the-shelf machine learning models poses a challenge: which model, of the many available candidates, should be chosen for a given data analysis task? This question of model selection is traditionally answered by collecting and annotating a validation dataset, a costly and time-intensive process. We propose a method for active model selection, using predictions from candidate models to prioritize the labeling of test data points that efficiently differentiate the best candidate. Our method, CODA, performs consensus-driven active model selection by modeling relationships between classifiers, categories, and data points within a probabilistic framework. The framework uses the consensus and disagreement between models in the candidate pool to guide the label acquisition process, and Bayesian inference to update beliefs about which model is best as more information is collected. We validate our approach by curating a collection of 25 benchmark tasks capturing a range of model selection scenarios. CODA outperforms existing methods for active model selection significantly, reducing the annotation effort required to discover the best model by upwards of 50% compared to the previous state-of-the-art. We will make our code and data public.
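The idea of using disagreement between candidate models to decide what to label next can be illustrated with a deliberately simplified heuristic. The sketch below picks the unlabeled point whose predicted labels have the highest vote entropy across models. This is an assumed stand-in for illustration only: it does not implement CODA's probabilistic framework or its Bayesian belief updates, and the function names and toy predictions are hypothetical.

```python
import math
from collections import Counter


def vote_entropy(labels):
    """Entropy of the label votes cast by the candidate models for one data point."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def next_point_to_label(predictions, already_labeled):
    """Return the index of the unlabeled point on which the models disagree most.

    predictions[m][i] is the class predicted by model m for data point i.
    """
    n_points = len(predictions[0])
    candidates = [i for i in range(n_points) if i not in already_labeled]
    return max(candidates,
               key=lambda i: vote_entropy([model[i] for model in predictions]))


# Toy predictions from three hypothetical candidate models on four data points.
predictions = [
    ["cat", "dog", "cat", "bird"],
    ["cat", "dog", "dog", "cat"],
    ["cat", "dog", "cat", "dog"],
]
first_query = next_point_to_label(predictions, already_labeled=set())
second_query = next_point_to_label(predictions, already_labeled={first_query})
```

Points on which every model agrees carry no information about which model is best, so they are queried last under this heuristic.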

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

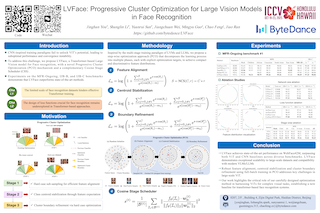

Vision Transformers (ViTs) have revolutionized large-scale visual modeling, yet remain underexplored in face recognition (FR), where CNNs still dominate. We identify a critical bottleneck: CNN-inspired training paradigms fail to unlock ViT's potential, leading to suboptimal performance and convergence instability. To address this challenge, we propose LVFace, a ViT-based FR model that integrates Progressive Cluster Optimization (PCO) to achieve superior results. Specifically, PCO sequentially applies negative class sub-sampling (NCS) for robust and fast feature alignment from random initialization, feature expectation penalties for centroid stabilization, and cluster boundary refinement through full-batch training without NCS constraints. LVFace establishes a new state-of-the-art face recognition baseline, surpassing leading approaches such as UniFace and TopoFR across multiple benchmarks. Extensive experiments demonstrate that LVFace delivers consistent performance gains, while exhibiting scalability to large-scale datasets and compatibility with mainstream VLMs and LLMs. Notably, LVFace secured 1st place in the ICCV 2021 Masked Face Recognition (MFR)-Ongoing Challenge (March 2025), proving its efficacy in real-world scenarios.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

We derive methods to compute higher-order differentials (Hessians and Hessian-vector products) of the rendering operator. Our approach is based on importance sampling of a convolution that represents the differentials of rendering parameters and is shown to be applicable to both rasterization and path tracing. We demonstrate that this information improves convergence when used in higher-order optimizers such as Newton or Conjugate Gradient relative to a gradient descent baseline in several inverse rendering tasks.
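As a rough illustration of how Hessian-vector products feed a higher-order optimizer, the sketch below takes one Newton step by solving `H d = -g` with conjugate gradient, touching the Hessian only through products `H v`. Everything here is an assumption for illustration: the objective is a toy quadratic rather than a rendering loss, and the Hessian-vector product is approximated by central differences of the gradient instead of the importance-sampled estimator described in the abstract.

```python
def grad(x):
    """Gradient of the toy objective f(x, y) = 2x^2 + xy + 1.5y^2 - x - 2y."""
    return [4.0 * x[0] + x[1] - 1.0, x[0] + 3.0 * x[1] - 2.0]


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def hessian_vector_product(grad_fn, x, v, eps=1e-4):
    """Approximate H(x) v by central differences of the gradient."""
    plus = grad_fn([xi + eps * vi for xi, vi in zip(x, v)])
    minus = grad_fn([xi - eps * vi for xi, vi in zip(x, v)])
    return [(p - q) / (2.0 * eps) for p, q in zip(plus, minus)]


def conjugate_gradient(hvp, rhs, iters=50, tol=1e-16):
    """Solve H d = rhs for symmetric positive-definite H using only products H v."""
    d = [0.0] * len(rhs)
    r = list(rhs)            # residual rhs - H d, with d = 0 initially
    p = list(r)              # current search direction
    rs = dot(r, r)
    for _ in range(iters):
        if rs < tol:
            break
        Hp = hvp(p)
        curvature = dot(p, Hp)
        if curvature <= 0.0:  # guard against degenerate or non-convex directions
            break
        alpha = rs / curvature
        d = [di + alpha * pi for di, pi in zip(d, p)]
        r = [ri - alpha * hi for ri, hi in zip(r, Hp)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return d


x0 = [5.0, -3.0]
g0 = grad(x0)
step = conjugate_gradient(lambda v: hessian_vector_product(grad, x0, v),
                          [-g for g in g0])
x1 = [a + b for a, b in zip(x0, step)]  # one Newton step from x0
```

Because the toy objective is quadratic, a single Newton step lands on the minimizer (1/11, 7/11), whereas plain gradient descent would need many iterations and a tuned step size.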

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

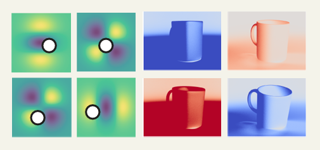

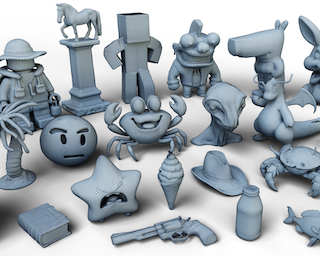

"What cannot be measured cannot be improved," while likely never uttered by Lord Kelvin, effectively summarizes the purpose of this work. This paper presents a detailed evaluation of automated metrics for evaluating structured 3D reconstructions. Pitfalls of each metric are discussed, and a thorough analysis through the lens of expert 3D modelers' preferences is presented. A set of systematic "unit tests" is proposed to empirically verify desirable properties, and context-aware recommendations as to which metric to use depending on the application are provided. Finally, a learned metric distilled from human expert judgments is proposed and analyzed.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

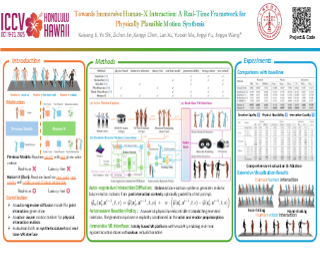

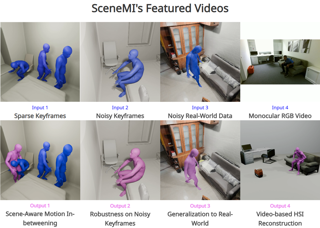

Real-time synthesis of physically plausible human interactions remains a critical challenge for immersive VR/AR systems and humanoid robotics. While existing methods demonstrate progress in kinematic motion generation, they often fail to address the fundamental tension between real-time responsiveness, physical feasibility, and safety requirements in dynamic human-machine interactions. We introduce Human-X, a novel framework designed to enable immersive and physically plausible human interactions across diverse entities, including human-avatar, human-humanoid, and human-robot systems. Unlike existing approaches that focus on post-hoc alignment or simplified physics, our method jointly predicts actions and reactions in real time using an auto-regressive reaction diffusion planner, ensuring seamless synchronization and context-aware responses. To enhance physical realism and safety, we integrate an actor-aware motion tracking policy trained with reinforcement learning, which dynamically adapts to interaction partners’ movements while avoiding artifacts like foot sliding and penetration. Extensive experiments on the Inter-X and InterHuman datasets demonstrate significant improvements in motion quality, interaction continuity, and physical plausibility over state-of-the-art methods. Our framework is validated in real-world applications, including a virtual reality interface for human-robot interaction, showcasing its potential for advancing human-robot collaboration.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

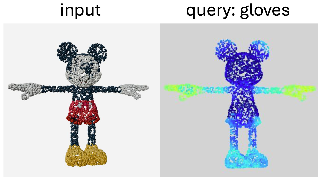

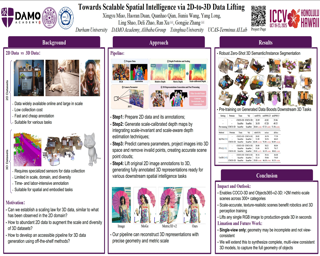

Why don't we have foundation models in 3D yet? A key limitation is data scarcity. For 3D object part segmentation, existing datasets are small in size and lack diversity. We show that it is possible to break this data barrier by building a data engine powered by 2D foundation models. Our data engine automatically annotates any number of object parts: 1755x more unique part types than existing datasets combined. By training on our annotated data with a simple contrastive objective, we obtain an open-world model that generalizes to any part in any object based on any text query. Even when evaluated zero-shot, we outperform existing methods on the datasets they train on. We achieve 260% improvement in mIoU and boost speed by 6x to 300x. Our scaling analysis confirms that this generalization stems from the data scale, which underscores the impact of our data engine. Finally, to advance general-category open-world 3D part segmentation, we release a benchmark covering a wide range of objects and parts.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Compared to image-text pair data, interleaved corpora enable Vision-Language Models (VLMs) to understand the world more naturally, as humans do. However, existing datasets of this kind are crawled from webpages and face challenges such as low knowledge density, loose image-text relations, and poor logical coherence between images. On the other hand, the internet hosts vast numbers of instructional videos (e.g., online geometry courses) that are widely used by humans to learn foundational subjects, yet these valuable resources remain underexplored in VLM training. In this paper, we introduce a high-quality **multimodal textbook** corpus with richer foundational knowledge for VLM pretraining. It collects over 2.5 years of instructional videos, totaling 22,000 class hours. We first use an LLM-proposed taxonomy to systematically gather instructional videos. Then we progressively extract and refine visual (keyframes), audio (ASR), and textual knowledge (OCR) from the videos, and organize them as an image-text interleaved corpus based on temporal order. Compared to its counterparts, our video-centric textbook offers more coherent context, richer knowledge, and better image-text alignment. Experiments demonstrate its superb pretraining performance, particularly in knowledge- and reasoning-intensive tasks like ScienceQA and MathVista. Moreover, VLMs pre-trained on our textbook exhibit outstanding interleaved context awareness, leveraging visual and textual cues in their few-shot context for task solving.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

In this work, we introduce **NoiseQuery** as a novel method for enhanced noise initialization in versatile goal-driven text-to-image (T2I) generation. Specifically, we propose to leverage an aligned Gaussian noise as implicit guidance to complement explicit user-defined inputs, such as text prompts, for better generation quality and controllability. Unlike existing noise optimization methods designed for specific models, our approach is grounded in a fundamental examination of the generic finite-step noise scheduler design in diffusion formulation, allowing better generalization across different diffusion-based architectures in a **tuning-free manner**. This model-agnostic nature allows us to construct a reusable noise library compatible with multiple T2I models and enhancement techniques, serving as a foundational layer for more effective generation. Extensive experiments demonstrate that **NoiseQuery** enables fine-grained control and yields significant performance boosts not only over high-level semantics but also over **low-level visual attributes**, which are typically difficult to specify through text alone, with seamless integration into current workflows with minimal computational overhead.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Recent progress in multimodal large language models has markedly enhanced the understanding of short videos (typically under one minute), and several evaluation datasets have emerged accordingly. However, these advancements fall short of meeting the demands of real-world applications such as embodied intelligence for long-term decision-making, in-depth movie reviews and discussions, and live sports commentary, all of which require comprehension of long videos spanning several hours. To address this gap, we introduce LVBench, a benchmark specifically designed for long video understanding. Our dataset comprises publicly sourced videos and encompasses a diverse set of tasks aimed at long video comprehension and information extraction. LVBench is designed to challenge multimodal models to demonstrate long-term memory and extended comprehension capabilities. Our extensive evaluations reveal that current multimodal models still underperform on these demanding long video understanding tasks. Through LVBench, we aim to spur the development of more advanced models capable of tackling the complexities of long video comprehension.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

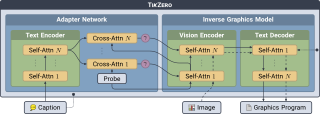

With the rise of generative AI, synthesizing figures from text captions becomes a compelling application. However, achieving high geometric precision and editability requires representing figures as graphics programs in languages like Ti*k*Z, and aligned training data (i.e., graphics programs with captions) remains scarce. Meanwhile, large amounts of unaligned graphics programs and captioned raster images are more readily available. We reconcile these disparate data sources by presenting Ti*k*Zero, which decouples graphics program generation from text understanding by using image representations as an intermediary bridge. It enables independent training on graphics programs and captioned images and allows for zero-shot text-guided graphics program synthesis during inference. We show that our method substantially outperforms baselines that can only operate with caption-aligned graphics programs. Furthermore, when leveraging caption-aligned graphics programs as a complementary training signal, Ti*k*Zero matches or exceeds the performance of much larger models, including commercial systems like GPT-4o. Our code, datasets, and select models will be made publicly available.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

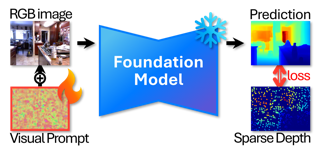

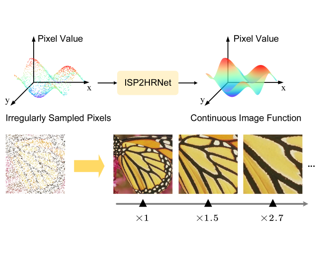

Zero-shot depth completion with metric scales poses significant challenges, primarily due to performance limitations such as domain specificity and sensor characteristics. One recent emerging solution is to integrate monocular depth foundation models into depth completion frameworks, yet these efforts still face issues with suboptimal performance and often require further adaptation to the target task. Surprisingly, we find that simple test-time training, which fine-tunes monocular depth foundation models directly on sparse depth measurements from sensors, yields reasonable results. However, this test-time training incurs high computational costs and introduces biases towards specific conditions, making it impractical for real-world scenarios. In this paper, we introduce a new approach toward parameter-efficient zero-shot depth completion. Our key idea is to leverage visual prompt tuning, achieving sensor-specific depth scale adaptation without forgetting foundational knowledge. Experimental results on diverse datasets demonstrate that our approach outperforms relevant state-of-the-art methods, showing superior generalization and efficiency. Our source code is available in the supplementary materials.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Video summarization is the task of shortening a video by choosing a subset of frames while preserving its essential moments. Despite the innate subjectivity of the task, previous works have deterministically regressed to an averaged frame score over multiple raters, ignoring the inherent subjectivity of what constitutes a "good" summary. We propose a novel problem formulation by framing video summarization as a conditional generation task, allowing a model to learn the distribution of good summaries and to generate multiple plausible summaries that better reflect varying human perspectives. Adopting diffusion models for the first time in video summarization, our proposed method, SummDiff, dynamically adapts to visual contexts and generates multiple candidate summaries conditioned on the input video. Extensive experiments demonstrate that SummDiff not only achieves state-of-the-art performance on various benchmarks but also produces summaries that closely align with individual annotator preferences. Moreover, we provide deeper insight with novel metrics from an analysis of the knapsack step, which is an important last step of generating summaries but has been overlooked in evaluation.
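The knapsack step mentioned at the end of the abstract is commonly posed as a 0/1 knapsack problem: given a predicted importance score and a length for each shot, choose the subset of shots with maximal total score whose total length fits a budget. The sketch below is a generic dynamic-programming solution under that assumption. The shot scores, shot lengths, and budget are made-up values, and nothing here reflects the specific metrics the authors derive from their analysis.

```python
def select_shots(scores, lengths, budget):
    """0/1 knapsack: pick shots maximizing total score with total length <= budget.

    scores[i] is the importance of shot i, lengths[i] its duration in frames
    (integers), and budget the maximum summary length in frames.
    """
    n = len(scores)
    # best[i][cap] = best total score using only the first i shots within capacity cap
    best = [[0.0] * (budget + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for cap in range(budget + 1):
            best[i][cap] = best[i - 1][cap]  # option 1: skip shot i-1
            if lengths[i - 1] <= cap:        # option 2: take shot i-1 if it fits
                candidate = best[i - 1][cap - lengths[i - 1]] + scores[i - 1]
                if candidate > best[i][cap]:
                    best[i][cap] = candidate
    # Backtrack to recover which shots were taken.
    chosen = []
    cap = budget
    for i in range(n, 0, -1):
        if best[i][cap] != best[i - 1][cap]:
            chosen.append(i - 1)
            cap -= lengths[i - 1]
    return sorted(chosen)


# Hypothetical per-shot scores and lengths (in frames) with a 50-frame budget.
scores = [0.9, 0.4, 0.7, 0.2]
lengths = [40, 10, 30, 20]
summary = select_shots(scores, lengths, budget=50)
```

In this toy case the selection is shots 0 and 1, which exactly fill the budget. Note that the selection depends on shot lengths as well as scores, so two score predictions of similar quality can yield different summaries after this step.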

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

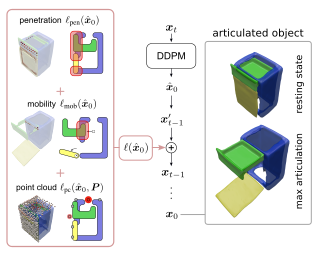

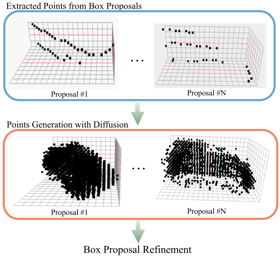

Articulated objects are an important type of interactable objects in everyday environments. In this paper, we propose a novel diffusion model-based approach for generating articulated objects that aligns them with partial point clouds and improves their physical plausibility. The model represents part shapes by signed distance functions (SDFs). We guide the reverse diffusion process using a point cloud alignment loss computed using the predicted SDFs. Additionally, we impose non-penetration and mobility constraints based on the part SDFs for guiding the model to generate more physically plausible objects. We also make our diffusion approach category-aware to further improve point cloud alignment if category information is available. We evaluate the generative ability and constraint consistency of samples generated with our approach using the PartNet-Mobility dataset. We also compare our approach with an unguided baseline diffusion model and demonstrate that our method can improve constraint consistency and provides a tradeoff with generative ability.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

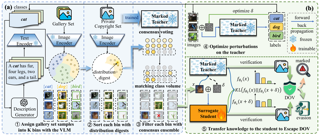

Modern over-parameterized deep models are highly data-dependent, with large scale general-purpose and domain-specific datasets serving as the bedrock for rapid advancements. However, many datasets are proprietary or contain sensitive information, making unrestricted model training problematic. In the open world where data thefts cannot be fully prevented, Dataset Ownership Verification (DOV) has emerged as a promising method to protect copyright by detecting unauthorized model training and tracing illicit activities. Due to its diversity and superior stealth, evading DOV is considered extremely challenging. However, this paper identifies that previous studies have relied on oversimplistic evasion attacks for evaluation, leading to a false sense of security. We introduce a unified evasion framework, in which a teacher model first learns from the copyright dataset and then transfers task-relevant yet identifier-independent domain knowledge to a surrogate student using an out-of-distribution (OOD) dataset as the intermediary. Leveraging Vision-Language Models and Large Language Models, we curate the most informative and reliable subsets from the OOD gallery set as the final transfer set, and propose selectively transferring task-oriented knowledge to achieve a better trade-off between generalization and evasion effectiveness. Experiments across diverse datasets covering eleven DOV methods demonstrate our approach simultaneously eliminates all copyright identifiers and significantly outperforms …

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

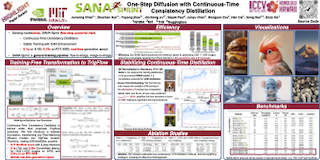

This paper presents SANA-Sprint, an efficient diffusion model for ultra-fast text-to-image (T2I) generation. SANA-Sprint is built on a pre-trained foundation model and augmented with hybrid distillation, dramatically reducing inference steps from 20 to 1-4. We introduce three key innovations: **(1)** We propose a training-free approach that transforms a pre-trained flow-matching model for continuous-time consistency distillation (sCM), eliminating costly training from scratch and achieving high training efficiency. Our hybrid distillation strategy combines sCM with latent adversarial distillation (LADD): sCM ensures alignment with the teacher model, while LADD enhances single-step generation fidelity. **(2)** SANA-Sprint is a unified step-adaptive model that achieves high-quality generation in 1-4 steps, eliminating step-specific training and improving efficiency. **(3)** We integrate ControlNet with SANA-Sprint for real-time interactive image generation, enabling instant visual feedback for user interaction. SANA-Sprint establishes a new Pareto frontier in speed-quality tradeoffs, achieving state-of-the-art performance with 7.59 FID and 0.74 GenEval in just 1 step, outperforming FLUX-schnell (7.94 FID / 0.71 GenEval) while being 10× faster (0.1s vs 1.1s on H100). It also achieves 0.1s (T2I) and 0.25s (ControlNet) latency for 1024×1024 images on H100, and 0.31s (T2I) on an RTX 4090, showcasing its exceptional efficiency and potential for AI-powered consumer applications (AIPC). Code and …

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

We present MeshLLM, a novel framework that leverages large language models (LLMs) to understand and generate text-serialized 3D meshes. Our approach addresses key limitations in existing methods, including the limited dataset scale when catering to LLMs' token length and the loss of 3D structural information during mesh serialization. We introduce a Primitive-Mesh decomposition strategy, which divides 3D meshes into structurally meaningful subunits. This enables the creation of a large-scale dataset with 1500k+ samples, almost 50x larger than previous methods, which aligns better with the LLM scaling law principles. Furthermore, we propose inferring face connectivity from vertices and local mesh assembly training strategies, significantly enhancing the LLMs' ability to capture mesh topology and spatial structures. Experiments show that MeshLLM outperforms the state-of-the-art LLaMA-Mesh in both mesh generation quality and shape understanding, highlighting its great potential in processing text-serialized 3D meshes.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Panoramic optical flow enables a comprehensive understanding of temporal dynamics across wide fields of view. However, severe distortions caused by sphere-to-plane projections, such as the equirectangular projection (ERP), significantly degrade the performance of conventional perspective-based optical flow methods, especially in polar regions. To address this challenge, we propose PriOr-Flow, a novel dual-branch framework that leverages the low-distortion nature of the orthogonal view to enhance optical flow estimation in these regions. Specifically, we introduce the Dual-Cost Collaborative Lookup (DCCL) operator, which jointly retrieves correlation information from both the primitive and orthogonal cost volumes, effectively mitigating distortion noise during cost volume construction. Furthermore, our Ortho-Driven Distortion Compensation (ODDC) module iteratively refines motion features from both branches, further suppressing polar distortions. Extensive experiments demonstrate that PriOr-Flow is compatible with various perspective-based iterative optical flow methods and consistently achieves state-of-the-art performance on publicly available panoramic optical flow datasets, setting a new benchmark for wide-field motion estimation.
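The polar distortion of the equirectangular projection can be quantified with a short calculation. The sketch below maps an ERP pixel to a unit viewing direction and reports the horizontal stretch factor `1 / cos(latitude)`, which grows without bound toward the poles. The axis convention (y up, z forward), the pixel-center offset, and the image size are assumptions made for illustration. The code is not part of PriOr-Flow.

```python
import math


def erp_pixel_to_direction(u, v, width, height):
    """Map ERP pixel (u, v) to a unit direction (x, y, z) and its latitude in radians.

    Assumed convention: longitude spans [-pi, pi) left to right, latitude spans
    +pi/2 (top row) to -pi/2 (bottom row), with y up and z forward.
    """
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    direction = (math.cos(lat) * math.sin(lon),
                 math.sin(lat),
                 math.cos(lat) * math.cos(lon))
    return direction, lat


def horizontal_stretch(v, height):
    """Factor by which ERP stretches content horizontally on image row v."""
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    return 1.0 / math.cos(lat)


WIDTH, HEIGHT = 1024, 512  # hypothetical panorama size
equator_stretch = horizontal_stretch(HEIGHT // 2, HEIGHT)
pole_stretch = horizontal_stretch(0, HEIGHT)
direction, latitude = erp_pixel_to_direction(WIDTH // 2, 0, WIDTH, HEIGHT)
```

On the row nearest the equator the stretch is essentially 1, while on the top row of a 512-pixel-high panorama it exceeds 300. This is why apparent motion near the poles looks very different from the perspective-image motion that conventional flow methods assume.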

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

4D Gaussian Splatting (4DGS) has recently emerged as a promising technique for capturing complex dynamic 3D scenes with high fidelity. It utilizes a 4D Gaussian representation and a GPU-friendly rasterizer, enabling rapid rendering speeds. Despite its advantages, 4DGS faces significant challenges, notably the requirement of millions of 4D Gaussians, each with extensive associated attributes, leading to substantial memory and storage cost. This paper introduces a memory-efficient framework for 4DGS. We streamline the color attribute by decomposing it into a per-Gaussian direct color component with only 3 parameters and a shared lightweight alternating current color predictor. This approach eliminates the need for spherical harmonics coefficients, which typically involve up to 144 parameters in classic 4DGS, thereby creating a memory-efficient 4D Gaussian representation. Furthermore, we introduce an entropy-constrained Gaussian deformation technique that uses a deformation field to expand the action range of each Gaussian and integrates an opacity-based entropy loss to limit the number of Gaussians, thus forcing our model to use as few Gaussians as possible to fit a dynamic scene well. With simple half-precision storage and zip compression, our framework achieves a storage reduction by approximately 190$\times$ and 125$\times$ on the Technicolor and Neural 3D Video datasets, respectively, compared to …
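The saving from the color decomposition alone can be estimated with simple arithmetic. The sketch below compares per-Gaussian color storage for the two parameter counts quoted in the abstract (up to 144 spherical-harmonics parameters versus 3 direct color parameters). The number of Gaussians is a hypothetical figure, the shared color predictor is ignored because its size is not stated, and the result covers color attributes only, so it is not expected to reproduce the overall storage reductions reported above.

```python
def color_storage_bytes(num_gaussians, params_per_gaussian, bytes_per_param):
    """Bytes needed to store the per-Gaussian color parameters."""
    return num_gaussians * params_per_gaussian * bytes_per_param


NUM_GAUSSIANS = 1_000_000  # hypothetical scene size, not taken from the abstract

# Up to 144 spherical-harmonics parameters per Gaussian, stored as float32.
sh_fp32 = color_storage_bytes(NUM_GAUSSIANS, 144, 4)
# 3 direct color parameters per Gaussian, stored as float32 and as float16.
dc_fp32 = color_storage_bytes(NUM_GAUSSIANS, 3, 4)
dc_fp16 = color_storage_bytes(NUM_GAUSSIANS, 3, 2)

ratio_same_precision = sh_fp32 / dc_fp32
ratio_with_half_precision = sh_fp32 / dc_fp16
```

Under these assumptions the color attributes shrink from 576 MB to 12 MB at equal precision (a factor of 48), or to 6 MB with half-precision storage (a factor of 96).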

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

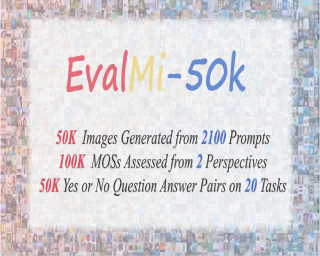

Recent breakthroughs in large multimodal models (LMMs) have significantly advanced both text-to-image (T2I) generation and image-to-text (I2T) interpretation. However, many generated images still suffer from issues related to perceptual quality and text-image alignment. Given the high cost and inefficiency of manual evaluation, an automatic metric that aligns with human preferences is desirable. To this end, we present EvalMi-50K, a comprehensive dataset and benchmark for evaluating large-multimodal image generation, which features (i) comprehensive tasks, encompassing 2,100 extensive prompts across 20 fine-grained task dimensions, and (ii) large-scale human-preference annotations, including 100K mean-opinion scores (MOSs) and 50K question-answering (QA) pairs annotated on 50,400 images generated from 24 T2I models. Based on EvalMi-50K, we propose LMM4LMM, an LMM-based metric for evaluating large multimodal T2I generation from multiple dimensions including perceptual quality, text-image correspondence, and task-specific accuracy. Extensive experimental results show that LMM4LMM achieves state-of-the-art performance on EvalMi-50K and exhibits strong generalization ability on other AI-generated image evaluation benchmark datasets, demonstrating the generality of both the EvalMi-50K dataset and the LMM4LMM metric. Both EvalMi-50K and LMM4LMM will be released upon publication.
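
The dataset sizes quoted above are mutually consistent, as a short check shows. The sketch assumes one image per prompt per model, two scored dimensions per image, and one QA pair per image; these assumptions are introduced here to reproduce the rounded figures and are not stated in the abstract.

```python
prompts = 2_100
t2i_models = 24

# Assumption: every model generates one image for every prompt.
images = prompts * t2i_models

# Assumption: each image receives two mean-opinion scores
# (e.g. perceptual quality and text-image correspondence).
mos_per_image = 2
mos_total = images * mos_per_image

# Assumption: one question-answering pair per image.
qa_pairs = images

print(images, mos_total, qa_pairs)
```

With these assumptions the image count matches the stated 50,400 exactly, and the MOS and QA totals round to the quoted 100K and 50K.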

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

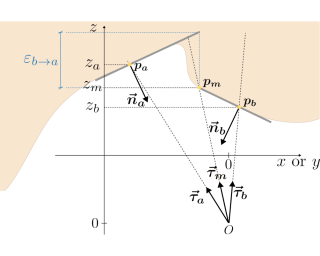

Recovering a 3D surface from its surface normal map, a problem known as normal integration, is a key component for photometric shape reconstruction techniques such as shape-from-shading and photometric stereo. The vast majority of existing approaches for normal integration handle depth discontinuities only implicitly and are limited to orthographic or ideal pinhole cameras. In this paper, we propose a novel formulation that allows modeling discontinuities explicitly and handling generic central cameras. Our key idea is based on a local planarity assumption, which we model through constraints between surface normals and ray directions. Compared to existing methods, our approach more accurately approximates the relation between depth and surface normals, achieves state-of-the-art results on the standard normal integration benchmark, and is the first to directly handle generic central camera models.
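
One way to see how local planarity can couple surface normals with ray directions is the following sketch. The symbols are introduced here for illustration and may differ from the paper's notation: $\boldsymbol{\tau}_a, \boldsymbol{\tau}_b$ are unit ray directions of two neighboring pixels, $d_a, d_b$ are the distances of the surface points along those rays, and $\mathbf{n}$ is the normal of the plane assumed to contain both points.

$$
\mathbf{p}_a = d_a\,\boldsymbol{\tau}_a,\qquad
\mathbf{p}_b = d_b\,\boldsymbol{\tau}_b,\qquad
\mathbf{n}^\top(\mathbf{p}_a - \mathbf{p}_b) = 0
\;\Longrightarrow\;
d_a\,\mathbf{n}^\top\boldsymbol{\tau}_a = d_b\,\mathbf{n}^\top\boldsymbol{\tau}_b .
$$

Because only ray directions appear in this relation, it does not depend on a particular projection model, which is consistent with the claim that generic central cameras can be handled.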

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

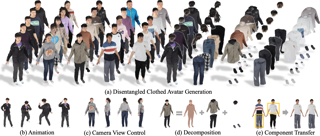

Clothed avatar generation has wide applications in virtual and augmented reality, filmmaking, and more. While existing methods have made progress in creating animatable digital avatars, generating avatars with disentangled components (e.g., body, hair, and clothes) has long been a challenge. In this paper, we propose LayerAvatar, a novel feed-forward diffusion-based method capable of generating high-quality component-disentangled clothed avatars in seconds. We propose a layered UV feature plane representation, where components are distributed in different layers of the Gaussian-based UV feature plane with corresponding semantic labels. This representation can be effectively learned with current feed-forward generation pipelines, facilitating component disentanglement and enhancing details of generated avatars. Based on the well-designed representation, we train a single-stage diffusion model and introduce constraint terms to mitigate the severe occlusion issue of the innermost human body layer. Extensive experiments demonstrate the superior performance of our method in generating highly detailed and disentangled clothed avatars. In addition, we explore its applications in component transfer.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

In recent years, text-to-image (T2I) diffusion models have garnered significant attention for their ability to generate high-quality images reflecting text prompts. However, their growing popularity has also led to the emergence of backdoor threats, posing substantial risks. Currently, effective defense strategies against such threats are lacking due to the diversity of backdoor targets in T2I synthesis. In this paper, we propose NaviDet, the first general input-level backdoor detection framework for identifying backdoor inputs across various backdoor targets. Our approach is based on the new observation that trigger tokens tend to induce significant neuron activation variation in the early stage of the diffusion generation process, a phenomenon we term Early-step Activation Variation. Leveraging this insight, NaviDet detects malicious samples by analyzing neuron activation variations caused by input tokens. Through extensive experiments, we demonstrate the effectiveness and efficiency of our method against various T2I backdoor attacks, surpassing existing baselines with significantly lower computational overhead. Furthermore, we rigorously demonstrate that our method remains effective against potential adaptive attacks.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

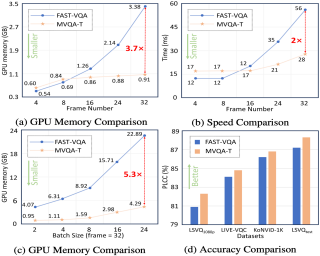

The rapid growth of long-duration, high-definition videos has made efficient video quality assessment (VQA) a critical challenge. Existing research typically tackles this problem through two main strategies: reducing model parameters and resampling inputs. However, lightweight Convolutional Neural Networks (CNNs) and Transformers often struggle to balance efficiency with high performance due to the requirement of long-range modeling capabilities. Recently, the state-space model, particularly Mamba, has emerged as a promising alternative, offering linear complexity with respect to sequence length. Meanwhile, efficient VQA heavily depends on resampling long sequences to minimize computational costs, yet current resampling methods are often weak in preserving essential semantic information. In this work, we present MVQA, a Mamba-based model designed for efficient VQA along with a novel Unified Semantic and Distortion Sampling (USDS) approach. USDS combines semantic patch sampling from low-resolution videos and distortion patch sampling from original-resolution videos. The former captures semantically dense regions, while the latter retains critical distortion details. To prevent computation increase from dual inputs, we propose a fusion mechanism using pre-defined masks, enabling a unified sampling strategy that captures both semantic and quality information without additional computational burden. Experiments show that the proposed MVQA, equipped with USDS, achieves comparable performance to state-of-the-art methods …

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

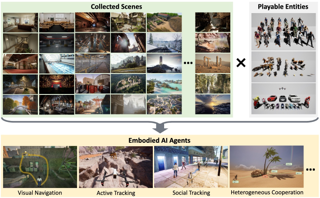

We introduce UnrealZoo, a rich collection of 100 photo-realistic 3D virtual worlds built on Unreal Engine, designed to reflect the complexity and variability of open worlds with scales up to $16\,\mathrm{km}^2$ landscapes. Additionally, we offer a rich variety of playable entities including humans, animals, robots, and vehicles for embodied AI. We extend UnrealCV with optimized Python APIs and tools for data collection, environment augmentation, distributed training, and benchmarking, achieving significant improvements in the efficiency of rendering and communication, to support advanced applications, such as multi-agent interactions. Our experimental evaluation across complex navigation and tracking tasks reveals two key insights: first, the substantial benefits of the diversity of environments for developing generalizable reinforcement learning (RL) agents; second, the persistent challenges that current embodied agents face in open-world settings. These challenges include transferring to a new embodiment at test time, managing latency in closed-loop control systems for dynamic environments, and effectively reasoning about complex 3D spatial structures in unstructured terrain. UnrealZoo thus provides both a powerful testing ground and a pathway toward more capable embodied AI systems for real-world deployment.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

The commercialization of generative artificial intelligence (GenAI) has led to a multi-level ecosystem involving model developers, service providers, and consumers. Thus, ensuring traceability is crucial, as service providers may violate intellectual property rights (IPR), and consumers may generate harmful content. However, existing methods are limited to single-level attribution scenarios and cannot simultaneously trace across multiple levels. To this end, we introduce a scalable dual fingerprinting method for text-to-image (T2I) models, to achieve traceability of both service providers and consumers. Specifically, we propose 2-headed Fingerprint-Informed Low-Rank Adaptation (FI-LoRA), where each head is controlled by a binary fingerprint and capable of introducing the fingerprints into generated images. In practice, one FI-LoRA head is used by the developer to assign a unique fingerprint to each service provider, while the other is made available to service providers for embedding consumer-specific fingerprints during image generation. Our method does not merely embed two fingerprints within the generated image but instead allows independent control over them at developer level and business level, enabling simultaneous traceability of businesses and consumers. Experiments show that our method applies to various image generation and editing tasks of multiple T2I models, and can achieve over 99.9\% extraction accuracy for both fingerprints. Our …

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

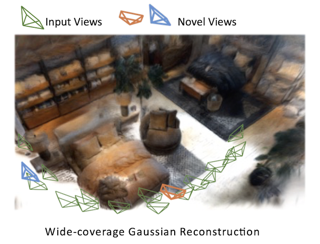

We propose Long-LRM, a feed-forward 3D Gaussian reconstruction model for instant, high-resolution, 360$^\circ$ wide-coverage, scene-level reconstruction. Specifically, it takes in 32 input images at a resolution of $960\times 540$ and produces the Gaussian reconstruction in just 1 second on a single A100 GPU. To handle the long sequence of **250K** tokens brought by the large input size, Long-LRM features a mixture of the recent Mamba2 blocks and the classical transformer blocks, enhanced by a light-weight token merging module and Gaussian pruning steps that balance between quality and efficiency. We evaluate Long-LRM on the large-scale DL3DV benchmark and Tanks&Temples, demonstrating reconstruction quality comparable to the optimization-based methods while achieving an **800**$\times$ speedup w.r.t. the optimization-based approaches and an input size at least **60**$\times$ larger than the previous feed-forward approaches. We conduct extensive ablation studies on our model design choices for both rendering quality and computation efficiency. We also explore Long-LRM's compatibility with other Gaussian variants such as 2D GS, which enhances Long-LRM's ability in geometry reconstruction. Project page: https://longgggglrm.github.io
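
The quoted sequence length can be sanity-checked with a short calculation. The sketch assumes that each token covers an 8×8 pixel patch; the patch size is an assumption made here for illustration and is not given in the abstract.

```python
def approx_token_count(num_images: int, width: int, height: int, patch: int) -> float:
    """Approximate token count when every token covers a patch x patch pixel area."""
    total_pixels = num_images * width * height
    return total_pixels / (patch * patch)

# 32 input images at 960x540, with an assumed 8x8 patch size.
tokens = approx_token_count(num_images=32, width=960, height=540, patch=8)
print(f"{tokens:,.0f} tokens")
```

Under this assumption the estimate lands at roughly 259K tokens, the same order as the 250K figure in the abstract.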

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Human perceptual systems excel at inducing and recognizing objects across both known and novel categories, a capability far beyond current machine learning frameworks. While generalized category discovery (GCD) aims to bridge this gap, existing methods predominantly focus on optimizing objective functions. We present an orthogonal solution, inspired by the human cognitive process for novel object understanding: decomposing objects into visual primitives and establishing cross-knowledge comparisons. We propose ConGCD, which establishes primitive-oriented representations through high-level semantic reconstruction, binding intra-class shared attributes via deconstruction. Mirroring human preference diversity in visual processing, where distinct individuals leverage dominant or contextual cues, we implement dominant and contextual consensus units to capture class-discriminative patterns and inherent distributional invariants, respectively. A consensus scheduler dynamically optimizes activation pathways, with final predictions emerging through multiplex consensus integration. Extensive evaluations across coarse- and fine-grained benchmarks demonstrate ConGCD's effectiveness as a consensus-aware paradigm.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

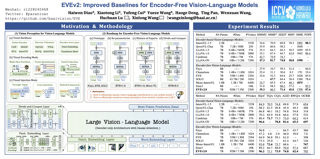

Existing encoder-free vision-language models (VLMs) are rapidly narrowing the performance gap with their encoder-based counterparts, highlighting the promising potential for unified multimodal systems with structural simplicity and efficient deployment. We systematically clarify the performance gap between VLMs using pre-trained vision encoders, discrete tokenizers, and minimalist visual layers from scratch, deeply excavating the under-examined characteristics of encoder-free VLMs. We develop efficient strategies for encoder-free VLMs that rival mainstream encoder-based ones. After an in-depth investigation, we launch EVEv2.0, a new and improved family of encoder-free VLMs. We show that: (i) Properly decomposing and hierarchically associating vision and language within a unified model reduces interference between modalities. (ii) A well-designed training strategy enables effective optimization for encoder-free VLMs. Through extensive evaluation, our EVEv2.0 represents a thorough study for developing a decoder-only architecture across modalities, demonstrating superior data efficiency and strong vision-reasoning capability.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

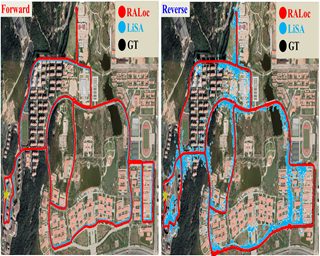

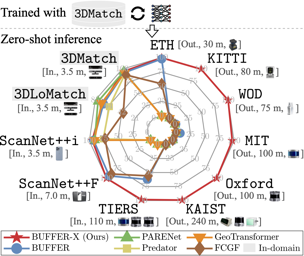

LiDAR localization is a fundamental task in autonomous driving and robotics. Scene Coordinate Regression (SCR) exhibits leading pose accuracy, achieving impressive results in learning-based localization. We observe that real-world LiDAR scans captured from different viewpoints usually result in the catastrophic collapse of SCR. However, existing LiDAR localization methods have largely overlooked the issue of rotation sensitivity in SCR. In this paper, we present RALoc, an outdoor LiDAR localization method with rotation awareness to achieve accurate localization. The key to our approach is a Point Cloud Canonicalization module, which leverages a powerful equivariant key feature aggregation to transform the input LiDAR scan towards a consistent orientation, effectively eliminating the adverse effects of rotation. The proposed module scales well and can be seamlessly integrated with existing LiDAR localization networks. Moreover, we propose the **Bi**directional **Li**DAR **Lo**calization (BiLiLo) dataset as a benchmark to evaluate the performance of various methods in large outdoor scenes with significant rotation changes. Extensive experiments show that RALoc significantly improves localization performance in scenarios with large rotation changes, and also achieves competitive performance on the Oxford Radar RobotCar dataset. Our code and dataset will be released upon acceptance.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

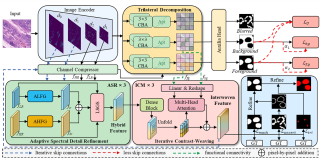

In recent years, it has been found that “grandmother cells” in the primary visual cortex (V1) of macaques can directly recognize visual input with complex shapes. This inspires us to examine the value of these cells in promoting the research of medical image segmentation. In this paper, we design a Similarity Memory Prior Network (Sim-MPNet) for medical image segmentation. Specifically, we propose a Dynamic Memory Weights–Loss Attention (DMW-LA), which matches and remembers the category features of specific lesions or organs in medical images through the similarity memory prior in the prototype memory bank, thus helping the network to learn subtle texture changes between categories. DMW-LA also dynamically updates the similarity memory prior in reverse through the Weight-Loss Dynamic (W-LD) update strategy, effectively assisting the network in directly extracting category features. In addition, we propose the Double-Similarity Global Internal Enhancement Module (DS-GIM) to deeply explore the internal differences in the feature distribution of input data through cosine similarity and Euclidean distance. Extensive experiments on four public datasets show that Sim-MPNet has better segmentation performance than other state-of-the-art methods. Our code is available at https://anonymous.4open.science/r/Sim-MPNet.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

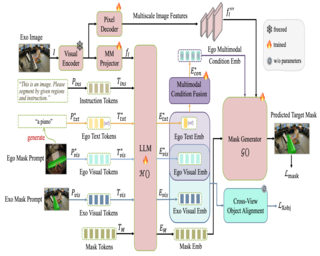

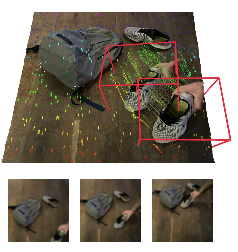

Bridging the gap between ego-centric and exo-centric views has been a long-standing question in computer vision. In this paper, we focus on the emerging Ego-Exo object correspondence task, which aims to understand object relations across ego-exo perspectives through segmentation. While numerous segmentation models have been proposed, most operate on a single image (view), making them impractical for cross-view scenarios. PSALM, a recently proposed segmentation method, stands out as a notable exception with its demonstrated zero-shot ability on this task. However, due to the drastic viewpoint change between ego and exo, PSALM fails to accurately locate and segment objects, especially in complex backgrounds or when object appearances change significantly. To address these issues, we propose ObjectRelator, a novel approach featuring two key modules: Multimodal Condition Fusion (MCFuse) and SSL-based Cross-View Object Alignment (XObjAlign). MCFuse introduces language as an additional cue, integrating both visual masks and textual descriptions to improve object localization and prevent incorrect associations. XObjAlign enforces cross-view consistency through self-supervised alignment, enhancing robustness to object appearance variations. Extensive experiments demonstrate ObjectRelator’s effectiveness on the large-scale Ego-Exo4D benchmark and HANDAL-X (an adapted dataset for cross-view segmentation) with state-of-the-art performance. Codes and models will be released.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Embodied scene understanding requires not only comprehending visual-spatial information that has been observed but also determining where to explore next in the 3D physical world. Existing 3D Vision-Language (3D-VL) models primarily focus on grounding objects in static observations from 3D reconstruction, such as meshes and point clouds, but lack the ability to actively perceive and explore their environment. To address this limitation, we introduce **M**ove **t**o **U**nderstand (**MTU3D**), a unified framework that integrates active perception with **3D** vision-language learning, enabling embodied agents to effectively explore and understand their environment. This is achieved by three key innovations: 1) Online query-based representation learning, enabling direct spatial memory construction from RGB-D frames, eliminating the need for explicit 3D reconstruction. 2) A unified objective for grounding and exploration that represents unexplored locations as frontier queries and jointly optimizes object grounding and frontier selection. 3) End-to-end trajectory learning that combines **V**ision-**L**anguage-**E**xploration pre-training over a million diverse trajectories collected from both simulated and real-world RGB-D sequences. Extensive evaluations across various embodied navigation and question-answering benchmarks show that MTU3D outperforms state-of-the-art reinforcement learning and modular navigation approaches by 14\%, 27\%, 11\%, and 3\% in success rate on HM3D-OVON, GOAT-Bench, SG3D, and A-EQA, respectively. MTU3D's versatility enables navigation …

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

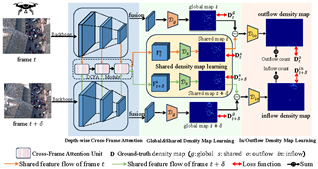

Video Individual Counting (VIC) has received increasing attention recently due to its importance in intelligent video surveillance. Existing works are limited in two aspects, i.e., dataset and method. Previous crowd counting datasets are captured with fixed or rarely moving cameras with relatively sparse individuals, restricting evaluation for a highly varying view and time in crowded scenes. While VIC methods have been proposed based on localization-then-association or localization-then-classification, they may not perform well due to the difficulty of accurately localizing crowded and small targets under challenging scenarios. To address these issues, we collect a MovingDroneCrowd Dataset and propose a density map based VIC method. Different from existing datasets, our dataset consists of videos captured by fast-moving drones in crowded scenes under diverse illuminations, shooting heights and angles. Rather than localizing individuals, we propose a Depth-wise Cross-Frame Attention (DCFA) module, which directly estimates inflow and outflow density maps to learn shared density between consecutive frames. The inflow density maps across frames are summed up to obtain the number of unique pedestrians in a video. Experiments on our datasets and publicly available ones show the superiority of our method over the state of the art for VIC in highly dynamic and complex crowded …
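
The counting rule described above, summing inflow density maps across frames, can be sketched as follows. The sketch assumes that everyone visible in the first frame counts as new, so the first frame contributes its full density map; that detail, the function name, and the toy values are assumptions made here and are not taken from the paper.

```python
def count_unique_individuals(first_frame_density, inflow_maps):
    """Estimate the number of unique individuals in a video.

    first_frame_density: 2D list, density map of the first frame (assumed to be
                         counted in full, since nobody has been seen before).
    inflow_maps:         list of 2D lists, one per later frame, holding the density
                         of individuals who newly entered the view.
    """
    total = sum(sum(row) for row in first_frame_density)
    for inflow in inflow_maps:
        total += sum(sum(row) for row in inflow)
    return total

# Toy 2x2 density maps with hand-picked values.
first = [[0.5, 0.5],
         [1.0, 0.0]]            # two people in the first frame
inflow_frame_2 = [[0.0, 1.0],
                  [0.0, 0.0]]   # one new person enters
inflow_frame_3 = [[0.25, 0.25],
                  [0.5, 0.0]]   # one more new person enters

unique_count = count_unique_individuals(first, [inflow_frame_2, inflow_frame_3])
```

Because the count is obtained by integrating density rather than by matching detections, no per-person localization or association step is needed in this formulation.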

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

We present OminiControl, a novel approach that rethinks how image conditions are integrated into Diffusion Transformer (DiT) architectures. Current image conditioning methods either introduce substantial parameter overhead or handle only specific control tasks effectively, limiting their practical versatility. OminiControl addresses these limitations through three key innovations: (1) a minimal architectural design that leverages the DiT's own VAE encoder and transformer blocks, requiring just 0.1\% additional parameters; (2) a unified sequence processing strategy that combines condition tokens with image tokens for flexible token interactions; and (3) a dynamic position encoding mechanism that adapts to both spatially-aligned and non-aligned control tasks. Our extensive experiments show that this streamlined approach not only matches but surpasses the performance of specialized methods across multiple conditioning tasks. To overcome data limitations in subject-driven generation, we also introduce Subjects200K, a large-scale dataset of identity-consistent image pairs synthesized using DiT models themselves. This work demonstrates that effective image control can be achieved without architectural complexity, opening new possibilities for efficient and versatile image generation systems.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Diffusion models like Stable Diffusion have become prominent in visual synthesis tasks due to their powerful customization capabilities. However, these capabilities also introduce significant security risks, such as deepfakes and copyright infringement. To mitigate these risks, a class of methods known as protective perturbation emerged, which prevents image misuse by injecting imperceptible adversarial noise. On the other hand, purification methods can effectively remove the protective perturbation, thereby exposing images again to the risk of malicious forgery. In this work, we formalize the anti-purification task, highlighting the challenges that existing approaches cannot address properly, and propose a solution named **AntiPure**. AntiPure is robust against the "purification-customization" workflow, owing to the two types of proposed guidance: 1) Patch-wise Frequency Guidance, which reduces the model’s influence over high-frequency components in the purified image, and 2) Erroneous Timestep Guidance, which disrupts the model’s denoising strategy across different timesteps. With additional guidance, AntiPure embeds imperceptible perturbation patterns resistant to purification, achieving effective output distortion after customization. Experiments show that our approach achieves minimal perceptual discrepancy, maximal distortion, and robust performance, outperforming current protective perturbation methods within the purification-customization workflow.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

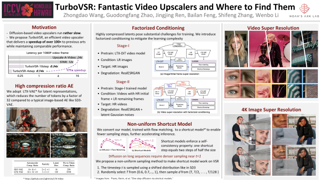

Diffusion-based generative models have demonstrated exceptional promise in super-resolution (SR) tasks, achieving a substantial advancement in detail generation relative to prior methods. However, these approaches face significant computational efficiency challenges. When the input is video, the problem becomes even more pronounced. For instance, current techniques may require tens of minutes to super-resolve a mere 2-second, 1080p video. In this paper, we present TurboVSR, an ultra-efficient diffusion-based video super-resolution model. Our core design comprises three key aspects: **(1)** We employ an autoencoder with a high compression ratio of 32$\times$32$\times$8 to reduce the number of tokens. **(2)** Highly compressed latents pose substantial challenges for training. We introduce factorized conditioning to mitigate the learning complexity: we first learn to super-resolve the initial frame; subsequently, we condition the super-resolution of the remaining frames on the high-resolution initial frame and the low-resolution subsequent frames. **(3)** We convert the pre-trained diffusion model to a shortcut model to enable fewer sampling steps, further accelerating inference. As a result, TurboVSR performs on par with state-of-the-art VSR methods, while being 100+ times faster, taking only 7 seconds to process a 2-second long 1080p video. TurboVSR also supports image super-resolution by treating an image as a one-frame video. Our efficient design makes …
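
To make the effect of the compression ratio concrete, the following sketch counts latent tokens for a 2-second 1080p clip. It assumes that 32×32×8 means 32× compression along each spatial axis and 8× along time, that the clip runs at 24 frames per second, and that a lower 8×8×4 ratio is a reasonable point of comparison; all three are assumptions made for illustration.

```python
import math

def latent_tokens(width: int, height: int, frames: int,
                  sx: int, sy: int, st: int) -> int:
    """Number of latent positions after spatial (sx, sy) and temporal (st) compression."""
    return math.ceil(width / sx) * math.ceil(height / sy) * math.ceil(frames / st)

frames = 2 * 24  # 2-second clip at an assumed 24 fps

tokens_high = latent_tokens(1920, 1080, frames, sx=32, sy=32, st=8)  # ratio from the abstract
tokens_low = latent_tokens(1920, 1080, frames, sx=8, sy=8, st=4)     # hypothetical comparison

# Runtime implied by the abstract: 7 seconds at "100+ times faster"
# corresponds to at least 700 seconds for the slower methods.
implied_baseline_seconds = 7 * 100
```

Under these assumptions the higher ratio yields about 32 times fewer tokens, and the implied baseline of roughly 12 minutes or more is in line with the "tens of minutes" mentioned earlier in the abstract.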

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

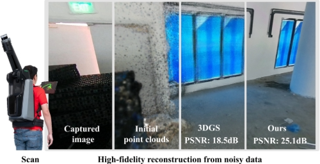

Neural surface reconstruction faces critical challenges in achieving geometrically accurate and visually coherent results under complex real-world conditions. We present a unified framework that simultaneously resolves multi-view radiance inconsistencies, enhances low-textured surface recovery, and preserves fine structural details through three fundamental innovations. First, our SDF-guided visibility factor $\mathbb{V}$ establishes continuous occlusion reasoning to eliminate reflection-induced ambiguities in multi-view supervision. Second, we introduce local geometry constraints via ray-aligned patch analysis $\mathbb{P}$, enforcing planarity in textureless regions while maintaining edge sensitivity through adaptive feature weighting. Third, we reformulate Eikonal regularization with rendering-prioritized relaxation, enabling detail preservation by conditioning geometric smoothness on local radiance variations. Unlike prior works that address these aspects in isolation, our method achieves synergistic optimization where multi-view consistency, surface regularity, and structural fidelity mutually reinforce without compromise. Extensive experiments across synthetic and real-world datasets demonstrate state-of-the-art performance, with quantitative improvements of 21.4\% in Chamfer distance over reflection-aware baselines and 2.32 dB PSNR gains against neural rendering counterparts. Qualitative results showcase unprecedented reconstruction quality for challenging cases including specular instruments, urban layouts with thin structures, and Lambertian surfaces with sub-millimeter details. Our code will be publicly released to facilitate research in unified neural surface recovery.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

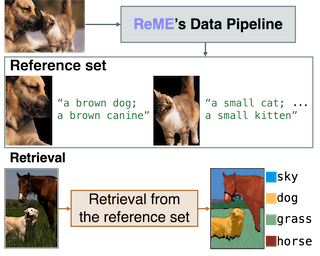

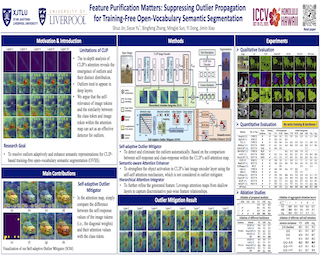

Training-free open-vocabulary semantic segmentation (OVS) aims to segment images given a set of arbitrary textual categories without costly model fine-tuning. Existing solutions often explore attention mechanisms of pre-trained models, such as CLIP, or generate synthetic data and design complex retrieval processes to perform OVS. However, their performance is limited by the capability of reliant models or the suboptimal quality of reference sets. In this work, we investigate the largely overlooked data quality problem for this challenging dense scene understanding task, and identify that a high-quality reference set can significantly benefit training-free OVS. With this observation, we introduce a data-quality-oriented framework, comprising a data pipeline to construct a reference set with well-paired segment-text embeddings and a simple similarity-based retrieval to unveil the essential effect of data. Remarkably, extensive evaluations on ten benchmark datasets demonstrate that our method outperforms all existing training-free OVS approaches, highlighting the importance of data-centric design for advancing OVS without training.
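
A similarity-based retrieval of the kind described above could look like the following sketch. It assumes that each image segment is represented by an embedding vector and that the reference set is a list of (embedding, label) pairs; the top-1 cosine-similarity rule, the function names, and the toy vectors are hypothetical and not taken from the paper.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve_label(segment_embedding, reference_set):
    """Return the label of the reference entry most similar to the segment.

    reference_set: list of (embedding, label) pairs (assumed structure).
    """
    best_embedding, best_label = max(
        reference_set,
        key=lambda item: cosine_similarity(segment_embedding, item[0]),
    )
    return best_label

# Toy reference set with two-dimensional embeddings.
reference = [([1.0, 0.0], "cat"), ([0.0, 1.0], "road")]
predicted = retrieve_label([0.9, 0.1], reference)
```

With a rule this simple, prediction quality depends almost entirely on how well the reference embeddings and labels are paired, which is the point the abstract makes about data quality.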

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Monocular dynamic reconstruction is a challenging and long-standing vision problem due to the highly ill-posed nature of the task. Existing approaches depend on templates, are effective only in quasi-static scenes, or fail to model 3D motion explicitly. We introduce a method for reconstructing generic dynamic scenes, featuring explicit, persistent 3D motion trajectories in the world coordinate frame, from casually captured monocular videos. We tackle the problem with two key insights: First, we exploit the low-dimensional structure of 3D motion by representing scene motion with a compact set of SE(3) motion bases. Each point's motion is expressed as a linear combination of these bases, facilitating soft decomposition of the scene into multiple rigidly-moving groups. Second, we take advantage of off-the-shelf data-driven priors such as monocular depth maps and long-range 2D tracks, and devise a method to effectively consolidate these noisy supervisory signals, resulting in a globally consistent representation of the dynamic scene. Experiments show that our method achieves state-of-the-art performance for both long-range 3D/2D motion estimation and novel view synthesis on dynamic scenes.
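
The idea of expressing a point's motion through shared motion bases can be sketched as below. The sketch assumes that each basis is a rigid transform (rotation matrix plus translation) and that blending is done by a weighted sum of the transformed points, as in linear blend skinning; the actual parameterization used by the method may differ, and all names are hypothetical.

```python
import math

def rot_z(theta: float):
    """3x3 rotation matrix about the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def apply_rigid(rotation, translation, point):
    """Apply a rigid transform: R @ p + t."""
    return [sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
            for i in range(3)]

def blend_motion(bases, weights, point):
    """Blend rigid motions: p' = sum_k w_k * (R_k p + t_k).

    bases:   list of (rotation, translation) pairs shared by the whole scene.
    weights: per-point coefficients, assumed to sum to 1.
    """
    moved = [0.0, 0.0, 0.0]
    for (rotation, translation), w in zip(bases, weights):
        q = apply_rigid(rotation, translation, point)
        moved = [m + w * qi for m, qi in zip(moved, q)]
    return moved

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
bases = [
    (identity, [1.0, 0.0, 0.0]),               # basis 0: shift along x
    (rot_z(math.pi / 2), [0.0, 0.0, 0.0]),     # basis 1: quarter turn about z
]
p = [1.0, 0.0, 0.0]

rigid_group_0 = blend_motion(bases, [1.0, 0.0], p)  # follows basis 0 only
rigid_group_1 = blend_motion(bases, [0.0, 1.0], p)  # follows basis 1 only
soft_mix = blend_motion(bases, [0.5, 0.5], p)       # softly assigned to both
```

One-hot weights reproduce a purely rigid group, while fractional weights give the soft decomposition into rigidly-moving groups that the abstract refers to.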

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

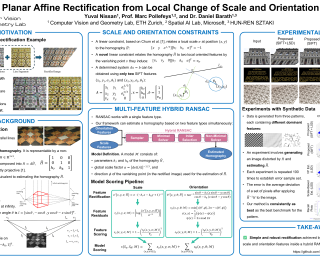

We propose a method for affine rectification of an image plane by leveraging changes in local scales and orientations under projective distortion. Specifically, we derive a novel linear constraint that directly relates pairs of points with orientations to the parameters of a projective transformation. This constraint is combined with an existing linear constraint on local scales, leading to highly robust rectification. The method reduces to solving a system of linear equations, enabling an efficient algebraic least-squares solution. It requires only two local scales and two local orientations, which can be extracted from, e.g., SIFT features. Unlike prior approaches, our method does not impose restrictions on individual features, does not require class segmentation, and makes no assumptions about feature interrelations. It is compatible with any feature detector that provides local scale or orientation. Furthermore, combining scaled and oriented points with line segments yields a highly robust algorithm that outperforms baselines. Extensive experiments show the effectiveness of our approach on real-world images, including repetitive patterns, building facades, and text-based content.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

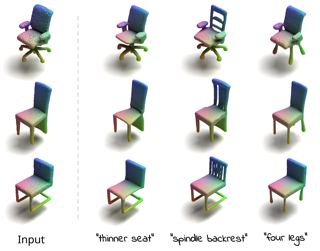

Recent advances in diffusion models have significantly improved image generation and editing, but extending these capabilities to 3D assets remains challenging, especially for fine-grained edits that require multi-view consistency. Existing methods typically restrict editing to predetermined viewing angles, severely limiting their flexibility and practical applications. We introduce Edit360, a tuning-free framework that extends 2D modifications to multi-view consistent 3D editing. Built upon video diffusion models, Edit360 enables user-specific editing from arbitrary viewpoints while ensuring structural coherence across all views. The framework selects anchor views for 2D modifications and propagates edits across the entire 360-degree range. To achieve this, Edit360 introduces a novel Anchor-View Editing Propagation mechanism, which effectively aligns and merges multi-view information within the latent and attention spaces of diffusion models. The resulting edited multi-view sequences facilitate the reconstruction of high-quality 3D assets, enabling customizable 3D content creation.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

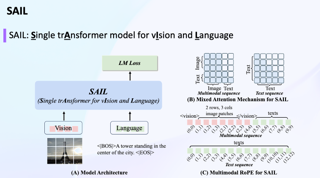

This paper introduces SAIL, a single-transformer unified multimodal large language model (MLLM) that integrates raw pixel encoding and language decoding within a single architecture. Unlike existing modular MLLMs, which rely on a pre-trained vision transformer (ViT), SAIL eliminates the need for a separate vision encoder, presenting a more minimalist architecture design. Instead of introducing novel architectural components, SAIL adapts mix-attention mechanisms and multimodal positional encodings to better align with the distinct characteristics of visual and textual modalities. We systematically compare SAIL's properties, including scalability, cross-modal information flow patterns, and visual representation capabilities, with those of modular MLLMs. By scaling both training data and model size, SAIL achieves performance comparable to modular MLLMs. Notably, the removal of pre-trained ViT components enhances SAIL's scalability and results in significantly different cross-modal information flow patterns. Moreover, SAIL demonstrates strong visual representation capabilities, achieving results on par with ViT-22B in vision tasks such as semantic segmentation. Code and models will be released.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

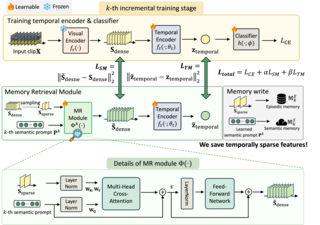

In this work, we tackle the problem of video class-incremental learning (VCIL). Many existing VCIL methods mitigate catastrophic forgetting by rehearsal training with a few temporally dense samples stored in episodic memory, which is memory-inefficient. Alternatively, some methods store temporally sparse samples, sacrificing essential temporal information and thereby resulting in inferior performance. To address this trade-off between memory-efficiency and performance, we propose EpiSodic and SEmaNTIc memory integrAtion for video class-incremental Learning (ESSENTIAL). We are inspired by the human memory system, which integrates episodic and semantic memory for accurate information retrieval. ESSENTIAL consists of episodic memory for storing temporally sparse features and semantic memory for storing general knowledge represented by learnable prompts. We introduce a novel memory retrieval (MR) module that integrates episodic and semantic memory through cross-attention, enabling the retrieval of temporally dense features from temporally sparse features. We rigorously validate ESSENTIAL on diverse datasets: UCF-101, HMDB51, and Something-Something-V2 from the TCD benchmark and UCF-101, ActivityNet, and Kinetics-400 from the vCLIMB benchmark. Remarkably, with significantly reduced memory, ESSENTIAL achieves favorable performance on the benchmarks.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Recently, open-vocabulary semantic segmentation has garnered growing attention. Most current methods leverage vision-language models like CLIP to recognize unseen categories through their zero-shot capabilities. However, CLIP struggles to establish potential spatial dependencies among scene objects due to its holistic pre-training objective, causing sub-optimal results. In this paper, we propose a DEnoising learning framework based on the Diffusion model for Open-vocabulary semantic Segmentation, called DEDOS, which is aimed at constructing the scene skeleton. Our motivation stems from the fact that diffusion models not only incorporate the visual appearance of objects but also embed rich scene spatial priors. Our core idea is to view images as labels embedded with "noise" (non-essential details for perceptual tasks) and to disentangle the intrinsic scene prior from the diffusion feature during the denoising process of the images. Specifically, to fully harness the scene prior knowledge of the diffusion model, we introduce learnable proxy queries during the denoising process. Meanwhile, we leverage the robustness of CLIP features to texture shifts as supervision, guiding proxy queries to focus on constructing the scene skeleton and avoiding interference from texture information in the diffusion feature space. Finally, we enhance spatial understanding within CLIP features using proxy queries, which also serve as an interface …

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Vision-language tracking aims to locate the target object in the video sequence using a template patch and a language description provided in the initial frame. To achieve robust tracking, especially in complex long-term scenarios that reflect real-world conditions as recently highlighted by MGIT, it is essential not only to characterize the target features but also to utilize the context features related to the target. However, the visual and textual target-context cues derived from the initial prompts generally align only with the initial target state. Due to their dynamic nature, target states are constantly changing, particularly in complex long-term sequences. It is intractable for these cues to continuously guide Vision-Language Trackers (VLTs). Furthermore, for the text prompts with diverse expressions, our experiments reveal that existing VLTs struggle to discern which words pertain to the target or the context, complicating the utilization of textual cues. In this work, we present a novel tracker named ATCTrack, which can obtain multimodal cues Aligned with the dynamic target states through comprehensive Target-Context feature modeling, thereby achieving robust tracking. Specifically, (1) for the visual modality, we propose an effective temporal visual target-context modeling approach that provides the tracker with timely visual cues. (2) For the textual …

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

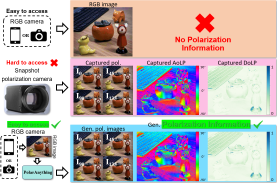

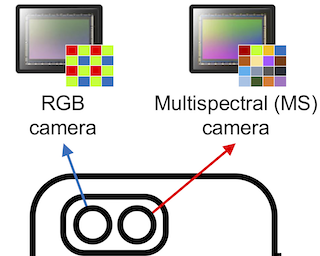

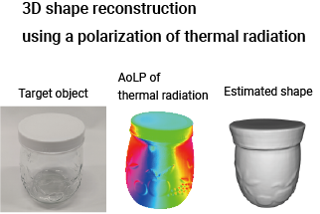

Polarization images facilitate image enhancement and 3D reconstruction tasks, but the limited accessibility of polarization cameras hinders their broader application. This gap drives the need for synthesizing photorealistic polarization images. The existing polarization simulator Mitsuba relies on a parametric polarization image formation model and requires extensive 3D assets covering shape and PBR materials, preventing it from generating large-scale photorealistic images. To address this problem, we propose PolarAnything, capable of synthesizing polarization images from a single RGB input with both photorealism and physical accuracy, eliminating the dependency on 3D asset collections. Drawing inspiration from the zero-shot performance of pretrained diffusion models, we introduce a diffusion-based generative framework with an effective representation strategy that preserves the fidelity of polarization properties. Extensive experiments show that our model not only generates high-quality polarization images but also effectively supports downstream tasks such as shape from polarization.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Miniaturized endoscopy has advanced accurate visual perception within the human body. Prevailing research remains limited to conventional cameras employing convex lenses, whose millimetre-scale thickness imposes serious physical constraints on micro-level clinical applications. Recently, with the emergence of meta-optics, ultra-micro imaging based on metalenses (micron-scale) has garnered great attention, serving as a promising solution. However, due to the physical differences of metalenses, there is a large gap in data acquisition and algorithm research. In light of this, we aim to bridge this unexplored gap, advancing the novel metalens endoscopy. First, we establish datasets for metalens endoscopy and conduct preliminary optical simulation, identifying two derived optical issues that physically adhere to strong optical priors. Second, we propose MetaScope, a novel optics-driven neural network tailored for metalens endoscopy. MetaScope comprises two novel designs: Optics-informed Intensity Adjustment (OIA), rectifying intensity decay by learning optical embeddings, and Optics-informed Chromatic Correction (OCC), mitigating chromatic aberration by learning spatial deformations informed by learned Point Spread Function (PSF) distributions. To enhance joint learning, we deploy a gradient-guided distillation to adaptively transfer knowledge from the foundational model. Extensive experiments demonstrate that our method surpasses state-of-the-art methods in metalens segmentation and restoration by …

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Leveraging the effective visual-text alignment and static generalizability of CLIP, recent video learners adopt CLIP initialization with further regularization or recombination to generalize in in-context open-vocabulary action recognition. However, due to the static bias of CLIP, such video learners tend to overfit on shortcut static features, thereby compromising their generalizability, especially to novel out-of-context actions. To address this issue, we introduce $\textbf{Open-MeDe}$, a novel Meta-optimization framework with static Debiasing for Open-vocabulary action recognition. From a fresh perspective of generalization, Open-MeDe adopts a meta-learning approach to improve $\textbf{known-to-open generalizing}$ and $\textbf{image-to-video debiasing}$ in a cost-effective manner. Specifically, Open-MeDe introduces a cross-batch meta-optimization scheme that explicitly encourages video learners to quickly generalize to arbitrary subsequent data via virtual evaluation, steering toward a smoother optimization landscape. In effect, the absence of CLIP regularization during optimization implicitly mitigates the inherent static bias of the video meta-learner. We further apply self-ensemble over the optimization trajectory to obtain generic optimal parameters that can achieve robust generalization to both in-context and out-of-context novel data. Extensive evaluations show that Open-MeDe not only surpasses state-of-the-art regularization methods tailored for in-context open-vocabulary action recognition but also substantially excels in out-of-context scenarios.
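One common way to realize a self-ensemble over an optimization trajectory is to average parameter snapshots taken during training. The sketch below assumes a uniform running mean over scalar parameters stored in a dictionary. The abstract does not specify the weighting or the snapshot schedule, so the class name `TrajectoryAverager` and the uniform weights are illustrative assumptions, not the Open-MeDe procedure.

```python
class TrajectoryAverager:
    """Uniform running average of parameter snapshots (illustrative only)."""

    def __init__(self):
        self.count = 0   # number of snapshots seen so far
        self.mean = {}   # parameter name -> running mean value

    def update(self, params):
        """Fold one snapshot (dict of name -> float) into the running mean."""
        self.count += 1
        for name, value in params.items():
            prev = self.mean.get(name, 0.0)
            # Incremental mean: m_k = m_{k-1} + (x_k - m_{k-1}) / k
            self.mean[name] = prev + (value - prev) / self.count
        return self.mean


# Hypothetical usage: snapshots taken after three optimization steps.
averager = TrajectoryAverager()
for snapshot in ({"w": 1.0, "b": 0.0}, {"w": 3.0, "b": 0.5}, {"w": 5.0, "b": 1.0}):
    ensembled = averager.update(snapshot)
```

The incremental form avoids storing every snapshot, which matters when the parameters are large tensors rather than the scalars used here.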

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Adversarially robust knowledge distillation transfers the robustness of a large-scale teacher model to a lightweight student while preserving natural performance. However, foundation Vision-Language Models (VLMs) also demand the transfer of zero-shot inference capabilities. We find that standard robust distillation using untargeted adversarial examples fails to transfer out-of-distribution (zero-shot) robustness, as these adversaries primarily push inputs away from their original distribution, exploring a limited portion of the teacher’s decision space and missing more diverse failure modes. A natural solution is to generate multiple targeted adversaries that traverse diverse paths across decision boundaries, so that they probe a broader region of the teacher’s decision surface. However, naive targeted adversary optimization often converges to local optima within a single category’s decision region, limiting the diversity. To address this, we propose a Multi-Objective Optimization (MOO)-based adversarial distillation framework that transfers robustness from large VLMs to lightweight ones by exploiting adversaries with two main objectives: misclassification and category-level adversarial diversity. Theoretically, we show that optimizing for diversity mitigates adversarial collapse into local optima, ensuring adversaries span multiple decision regions and capture the teacher’s generalizable robust features. Extensive experiments demonstrate the superiority of our method over state-of-the-art adversarial learning across diverse scenarios.

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract

Text-to-Image (T2I) Diffusion Models have achieved remarkable performance in generating high-quality images. However, enabling precise control of continuous attributes, especially multiple attributes simultaneously, in a new domain (e.g., numeric values like eye openness or car width) with text-only guidance remains a significant challenge. To address this, we introduce the **Attribute (Att) Adapter**, a novel plug-and-play module designed to enable fine-grained, multi-attribute control in pretrained diffusion models. Our approach learns a single control adapter from a set of sample images that can be unpaired and contain multiple visual attributes. The Att-Adapter leverages the decoupled cross attention module to naturally harmonize the multiple domain attributes with text conditioning. We further introduce a Conditional Variational Autoencoder (CVAE) to the Att-Adapter to mitigate overfitting, matching the diverse nature of the visual world. Evaluations on two public datasets show that Att-Adapter outperforms all LoRA-based baselines in controlling continuous attributes. Additionally, our method enables a broader control range and also improves disentanglement across multiple attributes, surpassing StyleGAN-based techniques. Notably, Att-Adapter is flexible, requiring no paired synthetic data for training, and is easily scalable to multiple attributes within a single model.
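Decoupled cross attention is commonly formulated as two separate cross-attention passes that share the same queries, one over text tokens and one over the extra condition tokens, with the outputs summed. The minimal single-head sketch below follows that general formulation. The abstract does not give the Att-Adapter's exact layer, so the function names, the `attr_scale` weight, and the omission of learned projection matrices are all assumptions made for illustration.

```python
import math


def attention(queries, keys, values):
    """Scaled dot-product attention over plain Python lists of vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        top = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        outputs.append([
            sum(w / total * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


def decoupled_cross_attention(queries, text_kv, attr_kv, attr_scale=1.0):
    """Sum of text attention and (scaled) attribute attention, sharing queries.

    text_kv and attr_kv are (keys, values) pairs. attr_scale is a
    hypothetical weight on the attribute branch.
    """
    text_out = attention(queries, *text_kv)
    attr_out = attention(queries, *attr_kv)
    return [
        [t + attr_scale * a for t, a in zip(t_row, a_row)]
        for t_row, a_row in zip(text_out, attr_out)
    ]
```

Setting `attr_scale` to zero reduces the layer to ordinary text cross attention, which is one reason this additive design suits plug-and-play adapters.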

|

|

Highlight

|

Poster

[ Exhibit Hall I ]

Abstract